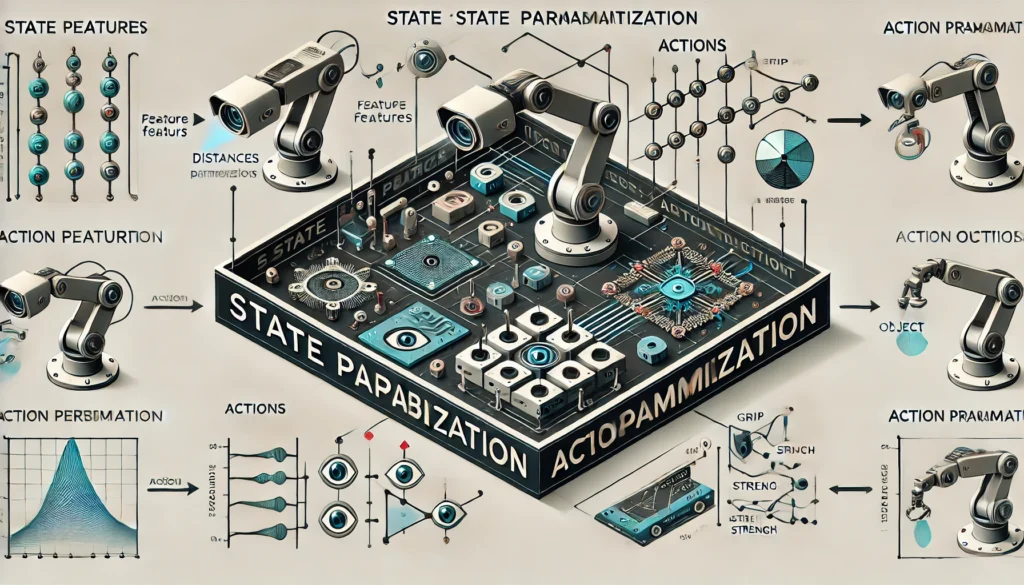

Reinforcement Learning (RL) is a powerful paradigm for training agents to learn optimal behaviors through interaction with an environment. However, as RL is applied to more complex and high-dimensional scenarios—such as robotics, gaming, or autonomous driving—the need for efficient representations becomes critical. This is where state parametrization and action parametrization play a pivotal role.

In this article, we’ll break down what state and action parametrization are, how they work, and why they are essential in scaling RL to real-world applications.

What Is State Parametrization?

State parametrization refers to how an agent perceives and represents the environment it’s interacting with. Instead of working with raw, unprocessed data (like camera images or noisy sensor values), state parametrization transforms that data into a more compact, meaningful, and learnable format.

Example:

In a robotic control task, instead of feeding in a raw image of the workspace, the agent might receive parameterized inputs such as:

- Joint angles

- Gripper force

- Object positions

- Relative distances

These parameters provide a more informative, low-dimensional view of the environment, which helps the agent learn policies more efficiently.
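As a rough illustration of the robotic example above, the sketch below turns a raw sensor reading into a short feature vector covering joint angles, gripper force, object position, and relative distance. The `RobotReading` fields, the assumed 50 N force ceiling, and the sin/cos angle encoding are all choices made for this example. They are not a standard interface.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class RobotReading:
    """Hypothetical raw reading for a single-arm robot."""
    joint_angles: List[float]                 # radians, one per joint
    gripper_force: float                      # newtons
    gripper_xyz: Tuple[float, float, float]   # metres, world frame
    object_xyz: Tuple[float, float, float]    # metres, world frame

def parametrize_state(r: RobotReading, max_force: float = 50.0) -> List[float]:
    """Map a raw reading to a compact, roughly normalised feature vector."""
    features: List[float] = []
    # Encode each joint angle as (sin, cos) so that -pi and pi map to the same point.
    for q in r.joint_angles:
        features.extend([math.sin(q), math.cos(q)])
    # Scale gripper force into [0, 1] using an assumed sensor maximum.
    features.append(max(0.0, min(r.gripper_force / max_force, 1.0)))
    # Object position relative to the gripper, followed by its Euclidean distance.
    rel = [o - g for o, g in zip(r.object_xyz, r.gripper_xyz)]
    features.extend(rel)
    features.append(math.sqrt(sum(d * d for d in rel)))
    return features

reading = RobotReading([0.0, math.pi / 2], 25.0, (0.0, 0.0, 0.0), (0.3, 0.4, 0.0))
phi = parametrize_state(reading)
print(len(phi), round(phi[-1], 3))  # 9 0.5
```

The resulting nine numbers are far smaller than a camera image, and each one has a direct physical meaning the policy can exploit.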

Why It Matters:

- Reduces the dimensionality of the input space

- Makes learning more data-efficient

- Enhances generalization to unseen states

In deep reinforcement learning (DRL), these parameterized states are typically fed into neural networks that approximate value functions, policies, or transition models.
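To make this concrete, here is a minimal sketch of a value estimate computed from a parameterized state. To stay dependency-free it assumes a linear approximator V(s) = w · φ(s) in place of a neural network, and it uses the standard TD(0) update. The function names are hypothetical.

```python
from typing import List

def dot(w: List[float], phi: List[float]) -> float:
    """Linear value estimate V(s) = w . phi(s)."""
    return sum(wi * xi for wi, xi in zip(w, phi))

def td0_update(w: List[float], phi: List[float], reward: float,
               phi_next: List[float], alpha: float = 0.1,
               gamma: float = 0.99, done: bool = False) -> List[float]:
    """Return the weights after one TD(0) step on the transition (s, r, s')."""
    v = dot(w, phi)
    # A terminal next state contributes no future value.
    v_next = 0.0 if done else dot(w, phi_next)
    td_error = reward + gamma * v_next - v
    # Move each weight in proportion to its feature's contribution to V(s).
    return [wi + alpha * td_error * xi for wi, xi in zip(w, phi)]

# Usage: one terminal transition with reward 1, repeated until V(s) is close to 1.
w = [0.0, 0.0]
phi = [1.0, 0.5]
for _ in range(200):
    w = td0_update(w, phi, reward=1.0, phi_next=[0.0, 1.0], done=True)
print(round(dot(w, phi), 6))  # 1.0
```

With a neural network, `dot` would become a forward pass and the weight update a gradient step on the squared TD error. The input would still be the compact feature vector rather than raw pixels.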

What Is Action Parametrization?

Action parametrization defines actions not just as discrete labels (e.g., “move left,” “grasp”) but as action types that carry continuous or variable parameters controlling how they are executed. This is particularly important in tasks that require fine-grained continuous control.

Example:

In a simulated soccer game, an action like “kick” might include parameters such as:

- Angle of kick

- Force applied

- Height or spin

Instead of choosing only from a fixed set of atomic actions, the agent first selects a discrete action type and then chooses values for that action's parameters to suit the current context.
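This two-stage choice can be sketched as a small data structure in which each discrete action type declares its own parameters and bounds. The `dash` and `turn` actions, the parameter names, and the numeric ranges are hypothetical, and a uniform random choice stands in for a learned policy.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical soccer action set: each action type lists (name, low, high) per parameter.
ACTION_SPACE: Dict[str, List[Tuple[str, float, float]]] = {
    "kick": [("angle_deg", -90.0, 90.0), ("force", 0.0, 100.0), ("spin", -1.0, 1.0)],
    "dash": [("direction_deg", -180.0, 180.0), ("power", 0.0, 100.0)],
    "turn": [("angle_deg", -180.0, 180.0)],
}

@dataclass
class ParameterizedAction:
    kind: str                  # discrete action type
    params: Dict[str, float]   # continuous parameters for that type

def clip_params(kind: str, raw: List[float]) -> ParameterizedAction:
    """Attach raw parameter values to an action type, clipping each to its bounds."""
    spec = ACTION_SPACE[kind]
    if len(raw) != len(spec):
        raise ValueError(f"{kind} expects {len(spec)} parameters, got {len(raw)}")
    params = {name: min(max(x, lo), hi) for (name, lo, hi), x in zip(spec, raw)}
    return ParameterizedAction(kind, params)

def sample_action(rng: random.Random) -> ParameterizedAction:
    """Pick a discrete type first, then values for that type's parameters."""
    kind = rng.choice(sorted(ACTION_SPACE))
    raw = [rng.uniform(lo, hi) for _, lo, hi in ACTION_SPACE[kind]]
    return clip_params(kind, raw)

print(clip_params("kick", [120.0, 55.0, 0.2]).params)
# {'angle_deg': 90.0, 'force': 55.0, 'spin': 0.2}
```

Keeping the bounds per action type means a parameter vector is only meaningful together with its discrete label; a "turn" with three parameters is rejected rather than silently misread.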

Why It Matters:

- Enables precision control in real-world tasks

- Expands the action space to include finer-grained decisions

- Supports adaptive behaviors in dynamic environments

This leads to a richer interaction model and allows RL agents to learn more sophisticated and effective strategies.

The Parameterized Action MDP (PAMDP)

The combination of discrete actions and continuous parameters has led to the formulation of Parameterized Action Markov Decision Processes (PAMDPs).

In a PAMDP, each action is composed of two parts:

- A discrete label (e.g., “move”, “rotate”)

- A continuous vector of parameters specific to that label (e.g., speed, duration)
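The same structure can be written compactly. In the notation below, which is introduced here for illustration, A_d is the finite set of discrete actions, each action a has its own parameter set X_a of dimension m_a, and the policy is factored into a discrete choice followed by a parameter choice (a common design, though not the only one). The last line is the Bellman optimality equation for the action-value function, with the maximization taken over both the discrete label and its parameters.

```latex
\begin{aligned}
A_d &= \{a_1, \dots, a_k\}, \qquad X_a \subseteq \mathbb{R}^{m_a} \ \text{for each } a \in A_d,\\
\mathcal{A} &= \bigcup_{a \in A_d} \{(a, x) \mid x \in X_a\},\\
\pi(a, x \mid s) &= \pi_d(a \mid s)\, \pi_a(x \mid s),\\
Q^*(s, a, x) &= \mathbb{E}_{r, s' \mid s, a, x}\Big[r + \gamma \max_{a' \in A_d} \sup_{x' \in X_{a'}} Q^*(s', a', x')\Big].
\end{aligned}
```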

Several algorithms have been developed to handle PAMDPs, such as:

- Q-PAMDP: Combines Q-learning with optimization over action parameters.

- Parameterized policy-gradient methods: Learn policies over both discrete action selection and continuous parameters using gradient-based updates.
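The idea behind Q-learning-based approaches can be sketched as a greedy action-selection step: for each discrete action, search for the parameters that maximize Q(s, a, x), then take the discrete action with the best score. This is not the published Q-PAMDP procedure. The coarse grid search stands in for a proper continuous optimizer, and `toy_q` is a hand-written, hypothetical Q-function rather than a learned one.

```python
from typing import Callable, Dict, List, Tuple

# A Q-function maps (state, discrete action, parameter) to an estimated return.
QFunction = Callable[[float, str, float], float]

def best_parameter(q: QFunction, s: float, a: str,
                   candidates: List[float]) -> Tuple[float, float]:
    """Inner step: approximately maximise Q(s, a, x) over x by grid search."""
    best_x = max(candidates, key=lambda x: q(s, a, x))
    return best_x, q(s, a, best_x)

def greedy_parameterized_action(q: QFunction, s: float,
                                grids: Dict[str, List[float]]) -> Tuple[str, float]:
    """Outer step: choose the discrete action whose best parameter scores highest."""
    scored = {a: best_parameter(q, s, a, xs) for a, xs in grids.items()}
    a_star = max(scored, key=lambda a: scored[a][1])
    return a_star, scored[a_star][0]

def toy_q(s: float, a: str, x: float) -> float:
    """Hypothetical Q-function; s is the distance to goal, x the action parameter."""
    if a == "kick":
        # Kicks lose value with distance and work best when force x is about 2 * s.
        return 10.0 - 0.1 * s - (x - 2.0 * s) ** 2
    # Any other action (here "dash") has a small value, best at power 50.
    return 3.0 - 0.01 * (x - 50.0) ** 2

grid = [float(x) for x in range(0, 101, 5)]
grids = {"kick": grid, "dash": grid}
print(greedy_parameterized_action(toy_q, 10.0, grids))  # ('kick', 20.0)
print(greedy_parameterized_action(toy_q, 80.0, grids))  # ('dash', 50.0)
```

At a distance of 10 the toy Q-function favors a kick with force 20; at 80 the best kick on the grid scores poorly, so a dash wins. In a learned system the same nested maximization applies, but Q is a trained approximator and the inner search is often replaced by a parameter network or gradient-based optimization.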

Benefits of Parametrization in RL

Integrating state and action parametrization into RL systems leads to several key benefits:

1. Generalization

Agents can apply learned policies across varying states and action parameter configurations, increasing robustness.

2. Efficiency

Reducing raw input complexity and enabling targeted actions lets agents learn faster and from fewer samples.

3. Scalability

Parametrized models scale better to high-dimensional or continuous domains like autonomous navigation or manipulation.

4. Real-World Applicability

Many real-life environments involve nuanced decisions that cannot be captured with discrete choices alone. Parametrization supports this complexity.

Applications of Parametrization in RL

These concepts are widely used in domains like:

- Robotics: Precise control over movement, grip, and path planning

- Game AI: Advanced strategies in real-time strategy or sports simulations

- Autonomous Vehicles: Steering, acceleration, and lane change decisions

- Healthcare: Personalized treatment recommendations based on patient-specific state parameters

Conclusion

As reinforcement learning continues to grow in complexity and ambition, the need for smarter input and output representations becomes ever more vital. State parametrization and action parametrization help bridge the gap between abstract learning algorithms and the messy, real-world environments they aim to operate in.

By encoding the most relevant aspects of the world and enabling fine-tuned actions, these techniques make RL more powerful, scalable, and practical—opening the door to intelligent agents that can reason, adapt, and perform in diverse domains.

Whether you’re developing robotic assistants, optimizing logistics, or training AI for games, understanding and implementing parametrization can significantly improve the performance and adaptability of your reinforcement learning systems.

Frequently Asked Questions

What is state parametrization in reinforcement learning?

State parametrization involves converting raw environmental data into a meaningful, structured format (like joint angles or object positions) that helps reinforcement learning agents understand and act more efficiently.

Why is action parametrization important in RL?

Action parametrization enables agents to perform actions with adjustable parameters—such as direction, speed, or force—allowing more precise and adaptive behavior in tasks like robotic control.

What are Parameterized Action Markov Decision Processes (PAMDPs)?

PAMDPs are RL frameworks that combine discrete actions with continuous parameters, enabling agents to select both an action type and the exact way it is executed.

How do parametrization techniques benefit RL models?

They improve learning speed, enhance generalization to new situations, support continuous control, and make agents more scalable and adaptable to real-world environments.

In what fields is RL with parametrization commonly used?

Common fields include robotics, autonomous vehicles, precision agriculture, game AI, and industrial automation.