Multi-Agent Reinforcement Learning (MARL) is a subfield of artificial intelligence in which multiple learning agents operate and interact within a shared environment. Unlike traditional reinforcement learning, where a single agent learns by trial and error, MARL adds a further layer of complexity: each agent must learn not just from the environment but also from the actions of other agents. Visual illustrations and real-world analogies make these interactions much easier to grasp.

This article dives into multi-agent reinforcement learning, emphasizing how illustrations can illuminate its core principles, learning dynamics, and applications.

What is Multi-Agent Reinforcement Learning?

MARL involves multiple agents learning simultaneously through interaction. Each agent observes its state, takes actions, and receives rewards that depend on its own behavior and on how the environment responds. Because the other agents are also learning and adapting, the environment is non-stationary from any single agent’s perspective: the same action in the same state can lead to different outcomes as the other agents change their behavior.
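The observe, act, and update loop can be sketched in a few lines. The example below is a minimal illustration, not a reference implementation: it assumes two stateless Q-learners playing a repeated two-action coordination game, and the function name, reward rule, and hyperparameters are all hypothetical choices made for this sketch.

```python
import random

def train_independent_learners(steps=2000, alpha=0.1, epsilon=0.1, seed=0):
    """Two stateless Q-learners repeatedly play a 2-action coordination game."""
    rng = random.Random(seed)
    # One Q-table per agent: q[i][a] estimates agent i's reward for action a.
    q = [[0.0, 0.0], [0.0, 0.0]]

    def choose(q_values):
        # Epsilon-greedy: explore with probability epsilon, otherwise act greedily.
        if rng.random() < epsilon:
            return rng.randrange(2)
        return max(range(2), key=lambda a: q_values[a])

    for _ in range(steps):
        actions = [choose(q[0]), choose(q[1])]
        # Assumed reward rule: both agents get 1 when they pick the same action.
        reward = 1.0 if actions[0] == actions[1] else 0.0
        for i in range(2):
            a = actions[i]
            # Each agent updates only its own estimate. The other agent is part
            # of its "environment", and that part keeps changing as it learns.
            q[i][a] += alpha * (reward - q[i][a])
    return q

q_tables = train_independent_learners()
greedy = [max(range(2), key=lambda a: q_i[a]) for q_i in q_tables]
print(greedy)  # greedy action of each agent after training
```

Neither agent ever sees the other's Q-table. Each one only experiences a reward signal whose meaning shifts as its partner's policy shifts, which is the non-stationarity described above.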

In MARL, agents can be:

- Cooperative: All agents share a common goal (e.g., swarm robotics).

- Competitive: Agents act against each other (e.g., game AI).

- Mixed: A blend of cooperation and competition (e.g., multi-team sports simulations).
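One way to make the three categories concrete is to look at the reward structure of a small two-agent game. The sketch below is a simplification under stated assumptions: it treats "cooperative" as identical rewards and "competitive" as strictly zero-sum, and the `classify_game` helper and the payoff tables are illustrative, not taken from any library.

```python
def classify_game(payoffs):
    """Classify a two-agent matrix game from its joint-action payoffs.

    payoffs maps (action_a, action_b) -> (reward_a, reward_b).
    """
    rewards = list(payoffs.values())
    if all(ra == rb for ra, rb in rewards):
        return "cooperative"   # identical rewards: a fully shared goal
    if all(ra + rb == 0 for ra, rb in rewards):
        return "competitive"   # zero-sum: one agent's gain is the other's loss
    return "mixed"             # partly aligned, partly opposed incentives

# Illustrative payoff tables (assumed values chosen for this example).
coordination = {(0, 0): (1, 1), (0, 1): (0, 0), (1, 0): (0, 0), (1, 1): (1, 1)}
matching_pennies = {(0, 0): (1, -1), (0, 1): (-1, 1), (1, 0): (-1, 1), (1, 1): (1, -1)}
prisoners_dilemma = {(0, 0): (3, 3), (0, 1): (0, 5), (1, 0): (5, 0), (1, 1): (1, 1)}

for name, game in [("coordination", coordination),
                   ("matching pennies", matching_pennies),
                   ("prisoner's dilemma", prisoners_dilemma)]:
    print(name, "->", classify_game(game))
```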

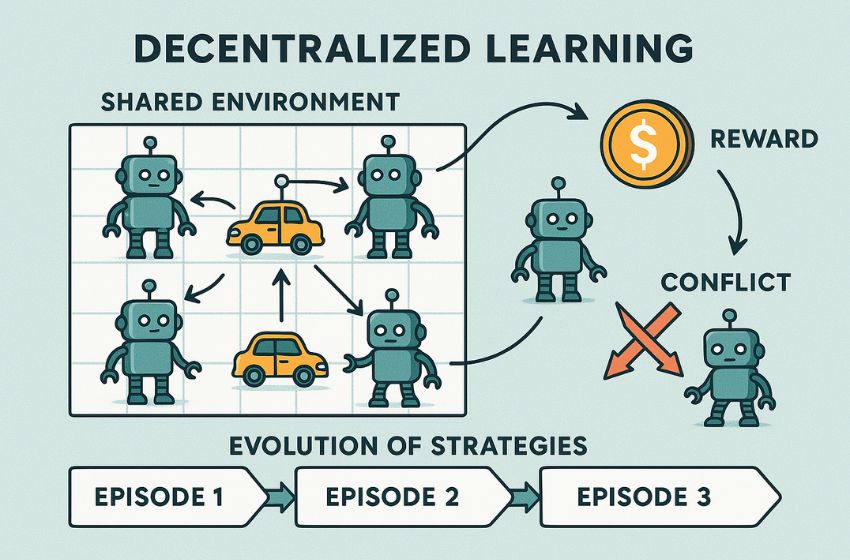

Key Elements of MARL Visualized

1. Agents and Environment

A typical MARL illustration begins with a visual of agents embedded in a shared environment. These agents receive inputs from the environment, select actions, and adjust their behavior based on outcomes.

- Imagine a grid world with robots (agents) moving around.

- Each robot senses nearby obstacles or agents and decides its next step.

- Over time, their paths improve through learned policies.
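The grid world described above can be sketched as a small class. This is a minimal, assumed design for illustration: the `GridWorld` class, its method names, and the rule that blocked moves leave a robot in place are all choices made here, and no learning is included.

```python
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0), "stay": (0, 0)}

class GridWorld:
    def __init__(self, width, height, positions, obstacles=()):
        self.width, self.height = width, height
        self.positions = dict(positions)   # robot name -> (x, y)
        self.obstacles = set(obstacles)

    def sense(self, agent, radius=1):
        """Return obstacles and other robots within `radius` cells of `agent`."""
        x, y = self.positions[agent]

        def near(p):
            # Chebyshev distance: diagonal neighbours count as adjacent.
            return max(abs(p[0] - x), abs(p[1] - y)) <= radius

        return {
            "obstacles": sorted(p for p in self.obstacles if near(p)),
            "agents": sorted(n for n, p in self.positions.items()
                             if n != agent and near(p)),
        }

    def step(self, actions):
        """Apply one action per robot in sorted-name order; blocked moves are ignored."""
        for agent in sorted(actions):
            dx, dy = MOVES[actions[agent]]
            x, y = self.positions[agent]
            target = (x + dx, y + dy)
            in_bounds = 0 <= target[0] < self.width and 0 <= target[1] < self.height
            occupied = any(p == target for n, p in self.positions.items() if n != agent)
            if in_bounds and target not in self.obstacles and not occupied:
                self.positions[agent] = target
        return dict(self.positions)

world = GridWorld(4, 4, {"r1": (0, 0), "r2": (2, 0)}, obstacles=[(1, 1)])
before = world.sense("r1")
after_step = world.step({"r1": "right", "r2": "left"})
after = world.sense("r1")
```

In this run both robots try to enter the same cell. `r1` moves first and takes it, so `r2` stays put, and `r1` now senses `r2` as a neighbour. A learned policy would replace the hard-coded actions passed to `step`.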

2. Agent Interactions

An essential concept in MARL illustrations is how agents influence one another. Depending on the setup, this may include:

- Communication: Visual lines or arrows indicating shared information.

- Conflict: Arrows showing opposing objectives (e.g., in a predator-prey game).

- Collaboration: Joint actions (like carrying an object together) highlighted by shared paths or rewards.

These visuals help explain how agents’ actions are interdependent, with each learning and adapting continuously.

Illustrated Examples of MARL

a. Feature Selection with Guide Agents

In one study, MARL was used for feature selection in machine learning tasks. Illustrations depicted a main agent learning the task while guide agents helped it identify relevant data features. This cooperative setup showed how MARL can refine decision-making through distributed guidance.

b. Electric Vehicle Charging Coordination

Another compelling illustration featured agents representing different electric vehicle (EV) charging stations. Each agent operated independently but needed to coordinate energy consumption to optimize the grid. These visuals showcased how decentralized agents can work collectively to manage shared resources efficiently.
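To show what coordinating over a shared resource can mean in reward terms, here is a hypothetical sketch. It is not the reward used in any particular study: the `charging_rewards` function, the penalty weight, and the kW figures are assumptions chosen only to illustrate a shared overload penalty.

```python
def charging_rewards(requests_kw, grid_capacity_kw, overload_penalty=2.0):
    """Per-station reward: power delivered minus a shared penalty for grid overload."""
    total = sum(requests_kw.values())
    overload = max(0.0, total - grid_capacity_kw)
    # Every station pays the same share of the penalty, so an overload
    # caused by any one station lowers the reward of all of them.
    shared_penalty = overload_penalty * overload / len(requests_kw)
    return {station: kw - shared_penalty for station, kw in requests_kw.items()}

greedy_rewards = charging_rewards({"A": 50, "B": 50}, grid_capacity_kw=80)
coordinated_rewards = charging_rewards({"A": 40, "B": 40}, grid_capacity_kw=80)
print(greedy_rewards, coordinated_rewards)
```

With these assumed numbers, two stations that each draw 50 kW overload an 80 kW grid and end up with a lower reward than two stations that each draw 40 kW.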

c. Industrial Distributed Control

A classic application of MARL is in factory automation. An illustration might show each robot arm or control unit as an agent managing part of an assembly line. With MARL, each local unit learns its task while adapting to changes in the others, which is typically illustrated through interconnected arrows and performance graphs.

Applications of MARL

Illustrations become even more powerful when grounded in real-world examples. Here are some domains where MARL and visual modeling go hand-in-hand:

- Autonomous Vehicles

Agents = cars.

Environment = roads.

Goal = minimize traffic congestion.

→ Illustrated using multiple cars navigating intersections while avoiding collisions.

- Robotics

Swarms of robots in warehouse management or rescue operations.

→ Maps show robots dividing areas, coordinating in real-time.

- Energy Grid Optimization

Agents represent nodes or control centers.

→ Flow diagrams show dynamic load balancing, power rerouting, and adaptive behaviors.

Learning Dynamics in MARL: Illustrated Insights

- Non-Stationarity: An evolving environment where agents continually adapt.

→ Visualized through changing heat maps or strategy shifts over time.

- Exploration vs. Exploitation: Balancing new strategy discovery with known rewards.

→ Animated paths showing initial randomness converging into optimized routes.

- Policy Sharing or Decentralization: Whether agents learn independently or via shared models.

→ Diagrammatic comparison of independent vs. centralized learning architectures.
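Two of these dynamics are easy to sketch in code. The example below is an assumed, minimal illustration: `epsilon_at` is a linear exploration schedule (one common choice among many), and `make_q_tables` contrasts independent value tables with a single table shared by all agents. Both function names and their default values are hypothetical.

```python
def epsilon_at(step, start=1.0, end=0.05, decay_steps=1000):
    """Linearly anneal the exploration rate from `start` down to `end`."""
    fraction = min(step / decay_steps, 1.0)
    return (1.0 - fraction) * start + fraction * end

def make_q_tables(agent_names, n_actions, shared):
    """Return one Q-table per agent, either independent or shared by reference."""
    if shared:
        table = [0.0] * n_actions
        # Every agent points at the same list, so one agent's update is
        # immediately visible to all the others.
        return {name: table for name in agent_names}
    # Separate lists: each agent learns only from its own experience.
    return {name: [0.0] * n_actions for name in agent_names}

shared_tables = make_q_tables(["a", "b"], n_actions=2, shared=True)
independent_tables = make_q_tables(["a", "b"], n_actions=2, shared=False)
shared_tables["a"][0] = 1.0
independent_tables["a"][0] = 1.0
```

After the two assignments, agent `b` sees the new value in the shared setup but not in the independent one. Early in training `epsilon_at` returns values near 1.0 (mostly random paths), and later it settles at `end` (mostly greedy routes).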

Tools and Resources for MARL Illustration

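- OpenAI Gym + PettingZoo: Gym (now maintained as Gymnasium) defines the standard single-agent environment interface, and PettingZoo provides a similar interface for multi-agent environments, making it possible to simulate and visualize MARL scenarios.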

- Unity ML-Agents Toolkit: Used for game-based simulations where agents interact in visually rich 3D worlds.

- TensorBoard: Useful for plotting agent learning curves, policies, and reward distributions.
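Learning curves in MARL tend to be noisy, so they are often smoothed before plotting. The sketch below is plain Python and does not call TensorBoard or any of the tools listed above. It assumes per-agent reward logs stored in a dictionary, and the `smooth` helper and the sample numbers are illustrative.

```python
def smooth(values, weight=0.9):
    """Exponential moving average of a noisy per-episode reward series."""
    smoothed, last = [], None
    for v in values:
        # The first point is kept as is; later points blend in the history.
        last = v if last is None else weight * last + (1 - weight) * v
        smoothed.append(last)
    return smoothed

# Hypothetical per-agent episode rewards.
logs = {"agent_0": [0.0, 4.0, 8.0], "agent_1": [2.0, 2.0, 6.0]}
curves = {agent: smooth(rewards, weight=0.5) for agent, rewards in logs.items()}
print(curves)
```

Plotting one smoothed curve per agent on the same axes makes it easier to see whether agents improve together or whether one agent's gains come at another's expense.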

Conclusion

Understanding Multi-Agent Reinforcement Learning can be complex, but with the help of effective illustrations, the underlying concepts become far more intuitive. These visuals help explain how agents collaborate, compete, and adapt in dynamic environments, making MARL a fascinating and practical field in AI.

Whether you’re developing autonomous fleets, optimizing distributed systems, or building collaborative robotics, illustrations offer a window into the multi-agent world, where intelligence is not just individual but collective.

By exploring and engaging with these visual tools, researchers and practitioners alike can better design, debug, and deploy MARL systems for a smarter, more cooperative future.

Why are illustrations useful in understanding MARL?

Illustrations simplify complex concepts by visually depicting how agents interact, learn over time, and adapt to dynamic environments. They help explain non-stationary learning, communication, and decentralized decision-making.

What are some real-world applications of MARL?

MARL is used in autonomous vehicles (traffic coordination), robotics (collaborative tasks), energy grid management, industrial automation, and game AI where multiple agents need to work together or compete.

How do MARL agents differ from single-agent reinforcement learners?

In MARL, each agent must account not just for its own actions and rewards, but also for the evolving strategies of other agents, making the environment more dynamic and non-stationary.

What tools are used to illustrate and simulate MARL environments?

Popular tools include OpenAI Gym with PettingZoo (multi-agent support), Unity ML-Agents (3D simulations), and TensorBoard for visualizing learning metrics and agent performance.